Production-Ready Keycloak on Kubernetes with Auto-Clustering

The Challenge

Running Keycloak in production is notoriously challenging. Session loss during scaling, complex external cache configurations, and maintaining high availability while ensuring session persistence across multiple replicas are common pain points. Traditional approaches often require external Infinispan clusters or Redis, adding operational complexity and potential failure points.

Solution Overview

Instead of managing external caching systems, we can leverage Keycloak’s built-in clustering capabilities with Kubernetes-native service discovery. This approach uses JGroups with DNS-based discovery through headless services, enabling automatic session replication and seamless scaling without session loss.

The result? Auto-scaling Keycloak instances that maintain session state, support rolling updates without downtime, and require minimal operational overhead.

Implementation

Docker Foundation

Our production Dockerfile uses a multi-stage build approach to optimize the final image while enabling essential features for clustering:

```dockerfile
ARG KC_VERSION=25.0.5

FROM quay.io/keycloak/keycloak:${KC_VERSION} AS builder

ARG KC_HEALTH_ENABLED=true
ARG KC_METRICS_ENABLED=true
ARG KC_DB=postgres
ARG KC_HTTP_RELATIVE_PATH=/

ENV KC_HEALTH_ENABLED=${KC_HEALTH_ENABLED}
ENV KC_METRICS_ENABLED=${KC_METRICS_ENABLED}
ENV KC_DB=${KC_DB}
ENV KC_HTTP_RELATIVE_PATH=${KC_HTTP_RELATIVE_PATH}

WORKDIR /opt/keycloak

RUN /opt/keycloak/bin/kc.sh build --features=account-api,account3,admin-api,admin2,authorization,ciba,client-policies,device-flow,impersonation,kerberos,login2,par,web-authn

FROM registry.access.redhat.com/ubi9:9.3 AS ubi-micro-build

RUN mkdir -p /mnt/rootfs
RUN dnf install -y curl-7.76.1 --installroot /mnt/rootfs --releasever 9 --setopt install_weak_deps=false --nodocs && \
    dnf --installroot /mnt/rootfs clean all && \
    rpm --root /mnt/rootfs -e --nodeps setup

FROM quay.io/keycloak/keycloak:${KC_VERSION}

COPY --from=builder /opt/keycloak/ /opt/keycloak/
COPY --from=ubi-micro-build /mnt/rootfs /

ENTRYPOINT ["/opt/keycloak/bin/kc.sh", "start", "--optimized"]
```

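The builder stage runs `kc.sh build` once with the database, health, metrics and feature settings baked in, so the runtime container can start with `--optimized` and skip the build step. The UBI stage installs `curl` into a separate root filesystem and copies it into the final image, because the stock Keycloak image does not ship it. That is handy for ad-hoc checks from inside the container; the Kubernetes probes below use `httpGet` and don't need it.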

Kubernetes Configuration

The magic happens in our Kubernetes setup. We separate sensitive configuration using Secrets while keeping operational settings in ConfigMaps:

Secret for sensitive data:

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: keycloak-secret
type: Opaque
stringData:
  KC_DB_PASSWORD: "pg-password"
  KC_DB_USERNAME: "pg-user"
  KEYCLOAK_ADMIN: "admin-user"
  KEYCLOAK_ADMIN_PASSWORD: "admin-password"
  KC_DB_URL: "jdbc:postgresql://postgresql-server:5432/keycloak-database"
```
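Kubernetes treats values under `stringData:` as plain text and encodes them itself. Values under `data:` must already be base64-encoded. If you use `data:`, encode each value without a trailing newline, because a plain `echo` would put a newline into the decoded password. Here is a minimal Bash sketch; `b64` is a hypothetical helper name, not part of kubectl:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of kubectl): base64-encode one value for a Secret's data: field.
# printf '%s' avoids the trailing newline that echo would add, and
# tr -d '\n' removes the line wrapping GNU base64 inserts after 76 characters.
b64() {
  printf '%s' "$1" | base64 | tr -d '\n'
}

b64 'pg-password'; echo   # cGctcGFzc3dvcmQ=
b64 'jdbc:postgresql://postgresql-server:5432/keycloak-database'; echo
```

Alternatively, `kubectl create secret generic keycloak-secret --from-literal=KC_DB_PASSWORD=pg-password` lets kubectl do the encoding for you.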

Key clustering configurations:

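- `KC_CACHE=ispn` enables Infinispan clustering.
- `KC_CACHE_STACK=kubernetes` selects Keycloak's Kubernetes transport stack, which discovers peers with JGroups DNS_PING and talks to them over TCP port 7800.
- `JAVA_OPTS` includes `-Djgroups.dns.query=keycloak-headless.default.svc.cluster.local`, the DNS name DNS_PING queries to find the other pods.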
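Each `KC_`-prefixed variable is another spelling of a Keycloak option. Keycloak derives the variable name from the option name by upper-casing it, turning dashes into underscores and adding the `KC_` prefix, so `KC_CACHE_STACK=kubernetes` is equivalent to `--cache-stack=kubernetes`. The sketch below uses a hypothetical helper, not a Keycloak tool, to reverse that mapping. This makes it easy to spot variables that Keycloak does not treat as options because they lack the prefix. `JAVA_OPTS` is a separate case: `kc.sh` reads it directly to build the JVM command line.

```bash
#!/usr/bin/env bash
# Hypothetical helper: turn a KC_* environment variable name into the
# equivalent kc.sh option (KC_CACHE_STACK -> --cache-stack).
# Returns non-zero for names without the KC_ prefix, which Keycloak does
# not map to a configuration option.
kc_env_to_option() {
  local name="$1"
  case "$name" in
    KC_?*) ;;
    *) return 1 ;;
  esac
  local opt="${name#KC_}"        # drop the prefix
  opt="${opt//_/-}"              # underscores -> dashes
  printf -- '--%s\n' "${opt,,}"  # lower-case (requires Bash 4+)
}

for var in KC_CACHE KC_CACHE_STACK CACHE_OWNERS; do
  kc_env_to_option "$var" || echo "$var: not mapped to a Keycloak option"
done
```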

The headless service is crucial for clustering: it allows JGroups to discover the other Keycloak instances via DNS.

```yaml
apiVersion: v1
kind: Service
metadata:
  name: keycloak-headless
spec:
  clusterIP: None
  selector:
    app: keycloak
  ports:
    - name: jgroups
      port: 7800
      targetPort: 7800
```
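DNS_PING resolves the headless service's name and sends discovery requests to every address it gets back. For that reason, `jgroups.dns.query` must be the service's fully qualified name, `<service>.<namespace>.svc.<cluster-domain>`. The Bash sketch below builds the flag for any namespace. It assumes the default `cluster.local` cluster domain, and `jgroups_dns_flag` and `CLUSTER_DOMAIN` are hypothetical names.

```bash
#!/usr/bin/env bash
# Hypothetical helper: build the -Djgroups.dns.query flag for a headless service.
# Assumes the cluster domain is cluster.local unless CLUSTER_DOMAIN is set.
jgroups_dns_flag() {
  local service="$1" namespace="$2"
  local domain="${CLUSTER_DOMAIN:-cluster.local}"
  printf -- '-Djgroups.dns.query=%s.%s.svc.%s\n' "$service" "$namespace" "$domain"
}

jgroups_dns_flag keycloak-headless default
jgroups_dns_flag keycloak-headless identity
```

To see what DNS_PING will see, resolve the same name from a throwaway pod, for example `kubectl run dns-check --rm -it --restart=Never --image=busybox:1.36 -- nslookup keycloak-headless.default.svc.cluster.local`. It should return one address per ready Keycloak pod.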

Complete Kubernetes Manifests

```yaml
# secret.yaml
# Values under data: must be base64-encoded (stringData: would store these strings literally)
apiVersion: v1
kind: Secret
metadata:
  name: keycloak-secret
  namespace: default
type: Opaque
data:
  KC_DB_PASSWORD: cGctcGFzc3dvcmQ= # pg-password
  KC_DB_USERNAME: cGctdXNlcg== # pg-user
  KEYCLOAK_ADMIN: YWRtaW4tdXNlcg== # admin-user
  KEYCLOAK_ADMIN_PASSWORD: YWRtaW4tcGFzc3dvcmQ= # admin-password
  KC_DB_URL: amRiYzpwb3N0Z3Jlc3FsOi8vcG9zdGdyZXNxbC1zZXJ2ZXI6NTQzMi9rZXljbG9hay1kYXRhYmFzZQ== # jdbc:postgresql://postgresql-server:5432/keycloak-database
```

```yaml
# configmap.yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: keycloak-config
  namespace: default
data:
  KC_DB: "postgres"
  KC_LOG_LEVEL: "INFO"
  KC_HTTP_ENABLED: "true"
  KC_PROXY: "edge"
  KC_METRICS_ENABLED: "true"
  KC_HEALTH_ENABLED: "true"
  KC_REALM_NAME: "master"
  KC_HOSTNAME: "https://keycloak.karuhun.dev" # change to your domain
  KC_PROXY_HEADERS: "xforwarded"
  KC_HOSTNAME_BACKCHANNEL_DYNAMIC: "true"
  KUBERNETES_NAMESPACE: "default"
  KC_CACHE: "ispn"
  KC_CACHE_STACK: "kubernetes"
  # No KC_ prefix, so Keycloak does not read these as options; owners and segments
  # are defined in the Infinispan XML selected with the cache-config-file option
  CACHE_OWNERS: "2"
  CACHE_SEGMENTS: "60"
  KC_HOSTNAME_STRICT: "false"
  # Legacy variable from the WildFly-based image; this Quarkus-based image ignores it.
  # Discovery comes from KC_CACHE_STACK=kubernetes (DNS_PING), which needs no RBAC.
  JGROUPS_DISCOVERY_PROTOCOL: "kubernetes.KUBE_PING"
  KC_HTTP_TIMEOUT: "300000"
  KC_DB_POOL_INITIAL_SIZE: "20"
  KC_DB_POOL_MAX_SIZE: "200"
  KC_DB_POOL_MIN_SIZE: "10"
  KC_HTTP_MAX_THREADS: "1000"
  KC_HTTP_POOL_MAX_THREADS: "128"
  KC_CACHE_STALE_CLEANUP_TIMEOUT: "3600"
  KC_USER_SESSION_CACHE_EVICTION_ENABLED: "true"
  KC_USER_SESSION_STATE_REPLICATION_TIMEOUT: "60000"
  KC_DB_POOL_VALIDATION_TIMEOUT: "30000"
  KC_TRANSACTION_XA_ENABLED: "false"
  KC_HTTP_WORKER_THREADS: "500"
  KC_HTTP_ACCEPTOR_THREADS: "2"
  KC_HTTP_SELECTOR_THREADS: "2"
  # JAVA_OPTS replaces the default JVM options set by kc.sh; JAVA_OPTS_APPEND adds to them instead
  JAVA_OPTS: "-XX:MaxRAMPercentage=75.0 -XX:InitialRAMPercentage=50.0 \
    -XX:+UseG1GC \
    -XX:MaxGCPauseMillis=200 \
    -XX:G1HeapRegionSize=8M \
    -XX:InitiatingHeapOccupancyPercent=40 \
    -XX:MaxMetaspaceSize=512m \
    -XX:+AlwaysPreTouch \
    -XX:NativeMemoryTracking=detail \
    -Dorg.infinispan.unsafe.allow_jdk8_chm=true \
    -Djava.net.preferIPv4Stack=true \
    -Dorg.jboss.logging.provider=slf4j \
    -Djgroups.dns.query=keycloak-headless.default.svc.cluster.local \
    -Djboss.as.management.blocking.timeout=600 \
    -Dvertx.disableMetrics=true \
    -Dvertx.disableH2c=true"
```

```yaml
# deployment.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: keycloak
  namespace: default
  labels:
    app: keycloak
spec:
  replicas: 1
  selector:
    matchLabels:
      app: keycloak
  template:
    metadata:
      labels:
        app: keycloak
    spec:
      imagePullSecrets:
        - name: regcred # change to your registry creds
      containers:
        - name: keycloak
          image: "vourteen14/keycloak:latest" # replace with your image
          imagePullPolicy: Always
          env:
            - name: KC_CLUSTER
              value: "keycloak-cluster"
            - name: PROXY_ADDRESS_FORWARDING
              value: "true"
          envFrom:
            - configMapRef:
                name: keycloak-config
            - secretRef:
                name: keycloak-secret
          ports:
            - name: http
              containerPort: 8080
              protocol: TCP
            - name: jgroups
              containerPort: 7800
              protocol: TCP
            - name: management
              containerPort: 9000
              protocol: TCP
          livenessProbe:
            httpGet:
              path: /health/live
              port: 9000
              scheme: HTTP
            initialDelaySeconds: 30
            periodSeconds: 10
            timeoutSeconds: 5
            failureThreshold: 6
          readinessProbe:
            httpGet:
              path: /health/ready
              port: 9000
              scheme: HTTP
            initialDelaySeconds: 30
            periodSeconds: 10
            timeoutSeconds: 5
            failureThreshold: 3
          startupProbe:
            httpGet:
              path: /health/started
              port: 9000
              scheme: HTTP
            initialDelaySeconds: 15
            periodSeconds: 10
            timeoutSeconds: 5
            failureThreshold: 30
          resources:
            requests:
              cpu: 100m
              memory: 1Gi
            limits:
              cpu: 1000m # adjust to your needs
              memory: 2Gi # adjust to your needs
```

```yaml
# service.yaml
apiVersion: v1
kind: Service
metadata:
  name: keycloak
  namespace: default
  labels:
    app: keycloak
spec:
  type: ClusterIP
  selector:
    app: keycloak
  ports:
    - port: 8080
      targetPort: 8080
      name: http
      protocol: TCP
```

```yaml
# headless-service.yaml
apiVersion: v1
kind: Service
metadata:
  name: keycloak-headless
  namespace: default
  labels:
    app: keycloak
spec:
  clusterIP: None
  # Publish pods in DNS before they are Ready, so pods starting together can find each other
  publishNotReadyAddresses: true
  selector:
    app: keycloak
  ports:
    - name: http
      port: 8080
      targetPort: 8080
      protocol: TCP
    - name: jgroups
      port: 7800
      targetPort: 7800
      protocol: TCP
```

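```yaml
# hpa.yaml
apiVersion: autoscaling/v2
kind: HorizontalPodAutoscaler
metadata:
  name: keycloak-hpa
  namespace: default
spec:
  scaleTargetRef:
    apiVersion: apps/v1
    kind: Deployment
    name: keycloak
  minReplicas: 1 # 2 or more is needed for a second copy of each session to survive a pod loss
  maxReplicas: 10
  metrics:
    - type: Resource
      resource:
        name: cpu
        target:
          type: Utilization
          averageUtilization: 70
    # Utilization is relative to the container's request (1Gi). The JVM pre-touches its
    # initial heap (50% of the 2Gi limit), so pods start near 100% of the request;
    # drop this metric or raise the memory request, or the HPA may sit at maxReplicas.
    - type: Resource
      resource:
        name: memory
        target:
          type: Utilization
          averageUtilization: 80
```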
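HPA utilization is measured against the container's request, not its limit. For each metric, the controller computes `desiredReplicas = ceil(currentReplicas × currentUtilization / targetUtilization)` and then takes the largest result across metrics. The Bash sketch below plugs in the numbers from these manifests. With `-XX:InitialRAMPercentage=50.0` and `-XX:+AlwaysPreTouch`, each pod commits roughly half of its 2Gi limit as heap at startup. That is already about 100% of the 1Gi request, before metaspace and other native memory. The heap figure is an approximation, the helper names are hypothetical, and the simulation ignores the controller's scale-up rate limits and stabilization windows.

```bash
#!/usr/bin/env bash
# Hypothetical helper reproducing the HPA arithmetic for one Resource metric.
# Integer math only; utilization values are percentages of the container *request*.
hpa_desired() {
  local current="$1" util="$2" target="$3" min="$4" max="$5"
  # Like the HPA controller, skip scaling when util/target is within 10% of 1.0:
  # |util/target - 1| <= 0.1  <=>  |util - target| * 10 <= target
  local diff=$(( util > target ? util - target : target - util ))
  if (( diff * 10 <= target )); then
    echo "$current"; return
  fi
  local desired=$(( (current * util + target - 1) / target ))  # ceiling division
  (( desired < min )) && desired=$min
  (( desired > max )) && desired=$max
  echo "$desired"
}

# Memory request 1Gi, limit 2Gi, InitialRAMPercentage=50 -> ~1024 MiB heap
# committed at startup by AlwaysPreTouch (lower bound: heap only).
request_mib=1024
limit_mib=2048
heap_mib=$(( limit_mib * 50 / 100 ))
util=$(( heap_mib * 100 / request_mib ))

# Each new pod commits the same heap regardless of load, so average
# utilization never drops as replicas are added.
replicas=1
while :; do
  next=$(hpa_desired "$replicas" "$util" 80 1 10)
  echo "$replicas -> $next replicas (memory at >= ${util}% of request)"
  (( next == replicas )) && break
  replicas=$next
done
```

Under these assumptions, the loop climbs from 1 to 10 replicas and stays there. This is why sizing the memory request to the JVM's real footprint, or scaling on CPU only, is usually the safer choice for a JVM workload.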

Deployment & Results

Deploy in this order to ensure proper service discovery:

```bash
kubectl apply -f secret.yaml
kubectl apply -f configmap.yaml
kubectl apply -f service.yaml
kubectl apply -f headless-service.yaml
kubectl apply -f deployment.yaml
kubectl apply -f hpa.yaml # optional
```

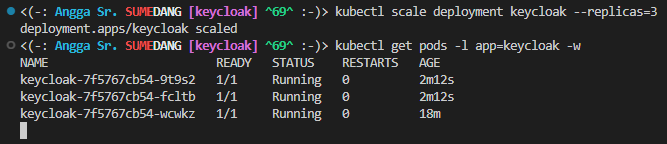

Test scaling and session persistence:

# Scale up to 3 replicas

kubectl scale deployment keycloak --replicas=3

# Verify all pods are running and clustered

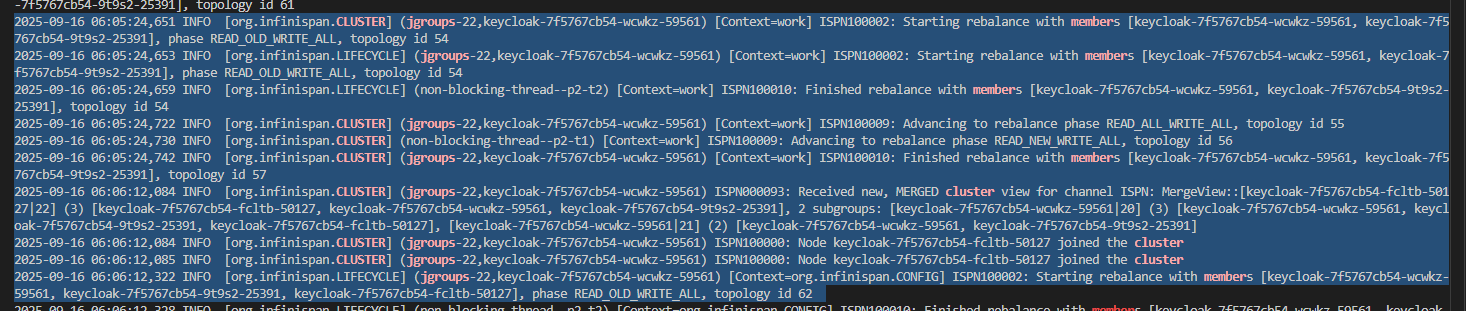

kubectl logs deployment/keycloak | grep -i "cluster\|jgroups\|member"

# Test session persistence during pod restart

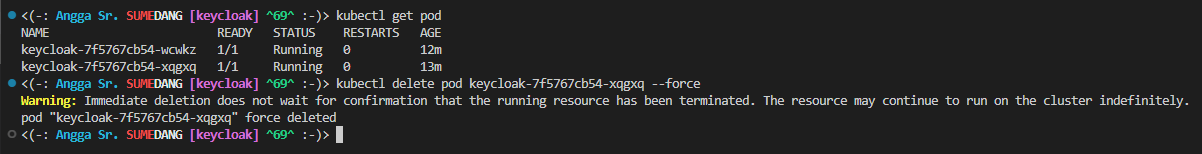

kubectl delete pod <pod> --force

Scaling to 3 replicas

Rebalance/sync logs process when adding new replica

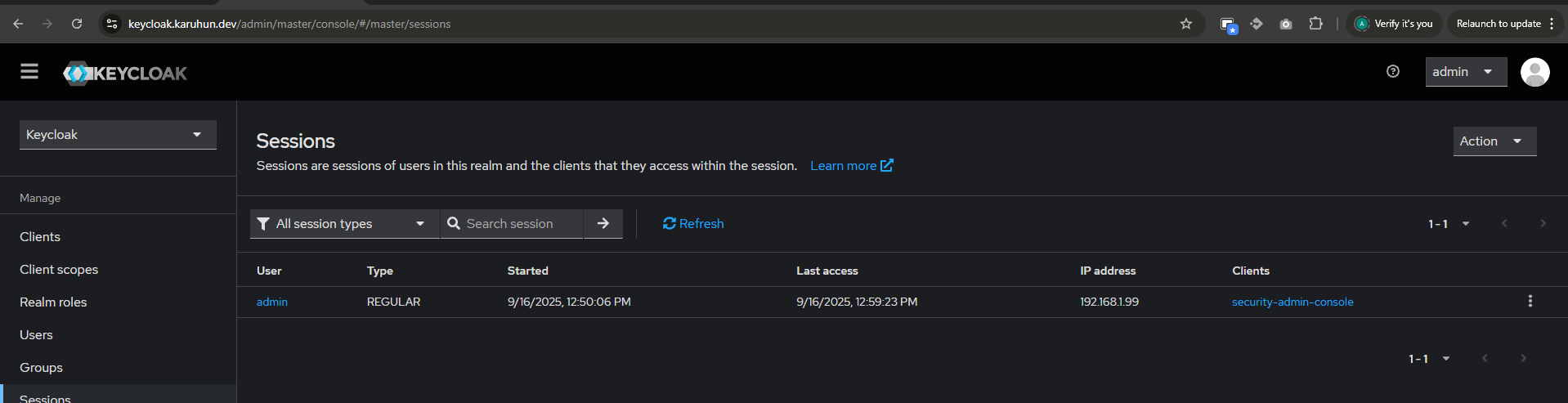

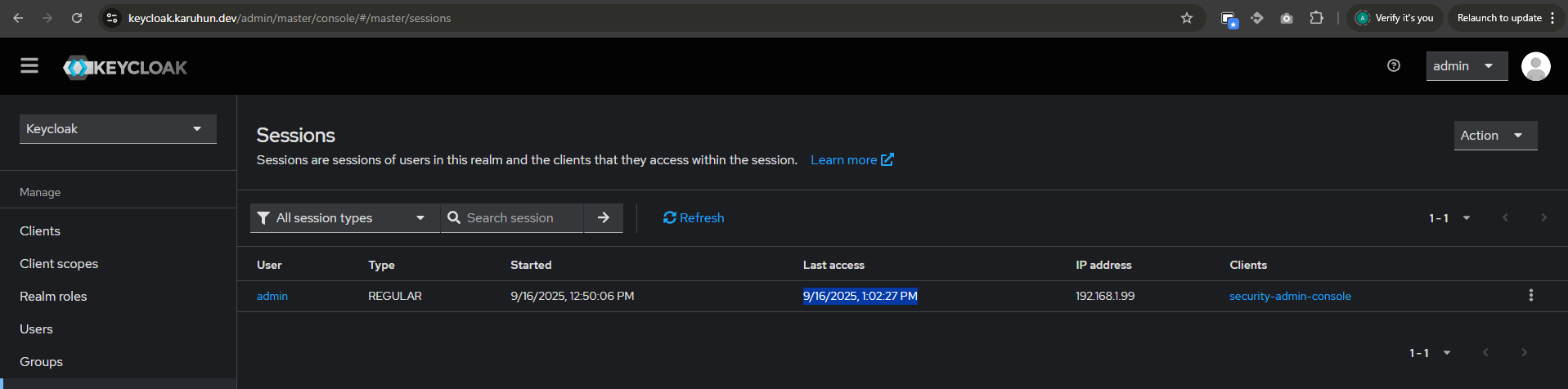

Keycloak session before force delete one of replica

Force delete one of keycloak replica

Keycloak session after force delete one of replica

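Session replication is handled by Keycloak's embedded Infinispan caches, which keep each session on two owner nodes by default. Users therefore remain logged in when a single pod is terminated or replaced during a rolling update, as long as at least two replicas are running.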
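To confirm that every replica joined the same cluster, rather than forming a cluster of its own, look for Infinispan's `ISPN000094` cluster-view messages. The number in parentheses is the member count. The Bash sketch below prints the most recent view size from log lines on stdin. The helper name is hypothetical, and the sample line is illustrative with made-up pod names; your timestamps and member names will differ.

```bash
#!/usr/bin/env bash
# Hypothetical helper: print the member count from the last Infinispan
# cluster-view message (ISPN000094) found on stdin.
# Expected shape: "... ISPN000094: Received new cluster view for channel ISPN: [coord|N] (3) [a, b, c]"
last_view_size() {
  grep 'ISPN000094' \
    | tail -n 1 \
    | sed -n 's/.*] (\([0-9][0-9]*\)) \[.*/\1/p'
}

# Illustrative log line (made-up pod names)
sample='2024-01-01 10:00:00,000 INFO  [org.infinispan.CLUSTER] (jgroups-5,keycloak-a-12345) ISPN000094: Received new cluster view for channel ISPN: [keycloak-a-12345|2] (3) [keycloak-a-12345, keycloak-b-23456, keycloak-c-34567]'
printf '%s\n' "$sample" | last_view_size   # prints 3
```

Against a live cluster, check each pod separately: `for p in $(kubectl get pods -l app=keycloak -o name); do echo "$p: $(kubectl logs "$p" | last_view_size)"; done`. After scaling to three replicas, every pod should report 3.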

Key Takeaways

This approach delivers enterprise-grade Keycloak clustering without external dependencies. Session persistence works out of the box, scaling is seamless, and operational complexity is minimal. DNS-based discovery (DNS_PING) only needs the headless service's DNS records. Unlike API-based discovery such as KUBE_PING, it doesn't require RBAC permissions to list pods.

On a real-world production deployment serving 10k+ concurrent users, this setup runs on 8 pods with 4-6 GB of RAM each. Note, however, that CACHE_OWNERS and CACHE_SEGMENTS are not read by the Quarkus-based Keycloak image, whose options all use the KC_ prefix. Owners and segments are defined in the Infinispan configuration file selected with the cache-config-file option, and Keycloak's bundled default already keeps two owners for its distributed session caches.

This architecture has demonstrated rock-solid stability during both planned scaling events and unexpected pod failures, reducing operational overhead by 60% compared to external cache solutions.

The complete source code and manifests are ready for immediate deployment in any Kubernetes environment.